Our book, Hands-On Large Language Models, Is Now Out!

We would love to know what you think!

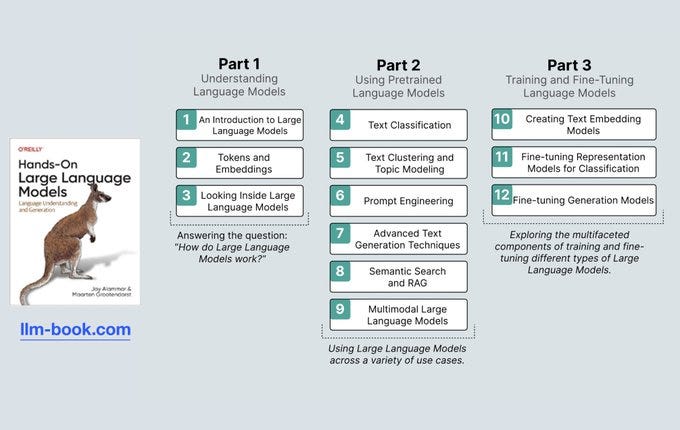

About 18 months after starting this wild project, we are happy to finally put LLM-book.com in your hands. It is available on Amazon and O’Reilly. In India, it’s available via Shroff. It stands at about 425 pages with 300 original figures in glorious full color, explaining hundreds of the main intuitions behind building and using LLMs.

All the code examples are available on GitHub here (1.7K stars so far! Wild!). Maarten and I chose a small model so you can run all the examples on the free Colab instances.

We are floored by the initial reception. Andrew Ng called it “a valuable resource for anyone looking to understand the main techniques behind how large language models are built.” Josh Starmer, maker of StatQuest, said, “I can't think of another book that is more important to read right now. On every single page, I learned something that is critical to success in this era of language models.”

Content Overview

The book is broken down into three parts. Part 1 explains how large language models work. This includes an updated, expanded, and modernized version of The Illustrated Transformer explaining 2024-era transformers. Part 2 is application-focused, and each chapter addresses a type of use case. Part 3 is for more advanced users who want to fine-tune models (representation or generation).

Chapter 1 Overview

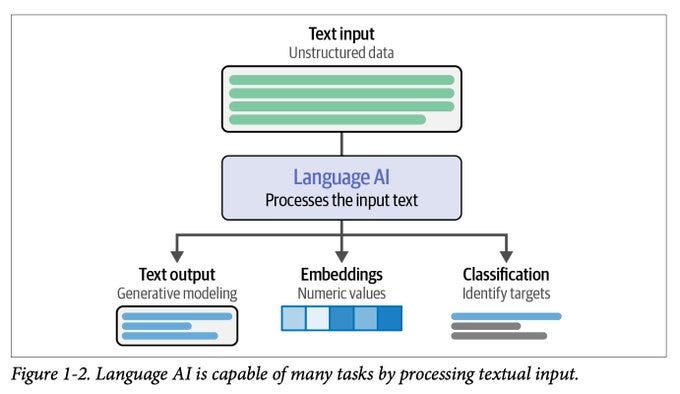

Chapter 1 paves the way for understanding LLMs by providing a history and overview of the concepts involved. A central concept the general public should know is that language models are not merely text generators: they can also form other systems (embedding, classification) that are useful for problem solving.

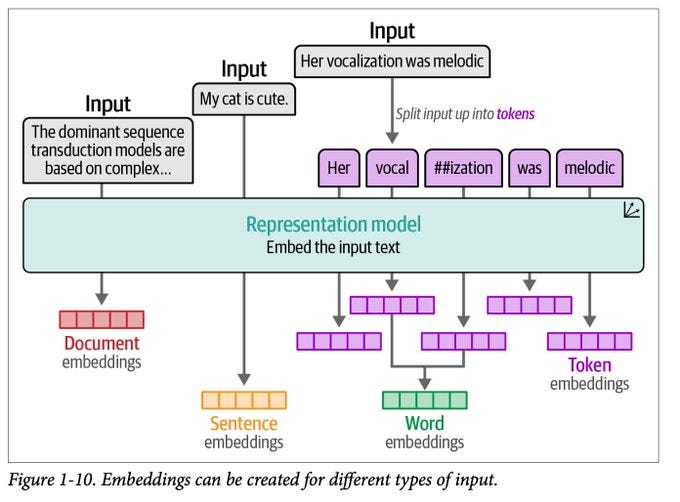

Embeddings are numeric representations that capture the meaning of text -- at the level of a document, sentence, word, or token.

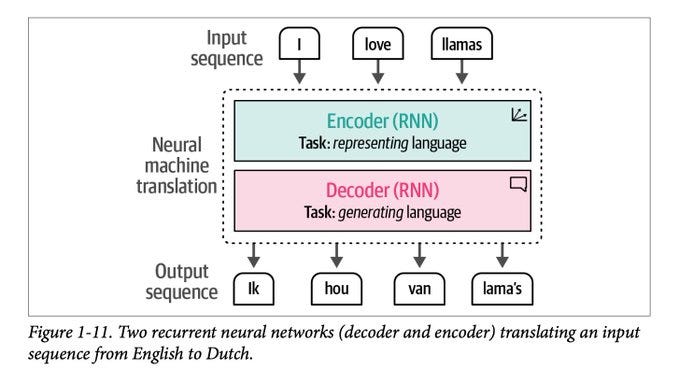

Prior to Transformers, Encoder-Decoder RNNs led the way in text generation and translation.

Throughout the book, we color-code language models as either representation models (green with a vector icon at the top right) or generative models (pink with a speech bubble icon). These figures start establishing that distinction. It becomes more important later on.

Chapter 2 Overview

Chapter 2: Tokens and Embeddings lays the foundation for understanding LLMs by breaking down two of their fundamental concepts.

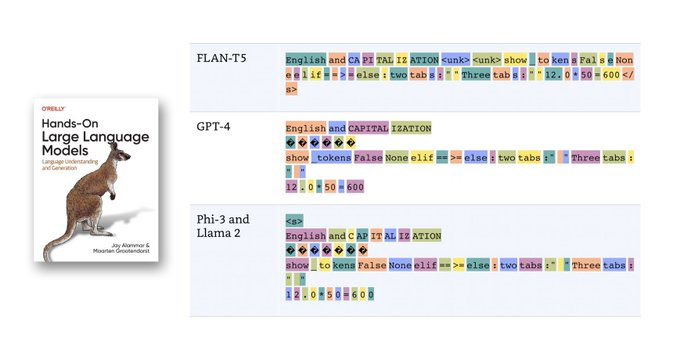

For more advanced readers, we show nuances of tokenization by comparing how different LLMs tokenize a certain string, showing their sensitivity to Unicode, multilingual text, code, numbers, and so on -- all things that impact their performance.
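To make that sensitivity concrete, here is a minimal sketch that uses only the Python standard library. It is not the book's notebook code and it does not load any real LLM tokenizer: the three functions below (`char_tokens`, `byte_tokens`, `word_tokens`) are hypothetical toy schemes, chosen only to show how the same string can turn into very different token counts depending on the scheme.

```python
import re

def char_tokens(text):
    """Toy scheme 1: one token per Unicode code point."""
    return list(text)

def byte_tokens(text):
    """Toy scheme 2: one token per UTF-8 byte (the base alphabet of byte-level tokenizers)."""
    return list(text.encode("utf-8"))

def word_tokens(text):
    """Toy scheme 3: runs of word characters, or single non-space symbols."""
    return re.findall(r"\w+|[^\w\s]", text)

# Illustrative strings (assumptions, not taken from the book).
samples = {
    "english": "show tokens",
    "accented": "caf\u00e9",      # "café" with a precomposed é
    "emoji": "\U0001F3B5",        # musical note emoji
    "number": "12345",
    "code": "x == y",
}

for name, text in samples.items():
    print(
        f"{name:9}"
        f" chars={len(char_tokens(text))}"
        f" bytes={len(byte_tokens(text))}"
        f" words={len(word_tokens(text))}"
    )
```

Even in this toy setting, a single accented letter or emoji costs more byte-level tokens than character-level ones, and a five-digit number is one token under one scheme and five under another. Real LLM tokenizers are learned from data and behave differently in the details, but the same kind of variation is what the chapter's comparison highlights.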

The code for visualizing these tokenizations is in the chapter 2 notebook here. You can use it to visualize other models, as long as their tokenizers are on the Hugging Face Hub. Tokens and embeddings open the door to plenty of clever solutions that go beyond text LLMs. The chapter then walks through one such example: a music recommendation system that treats songs as tokens and playlists as sentences.
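The "songs as tokens, playlists as sentences" idea can be sketched in a few lines. This is a simplified stand-in, not the chapter's implementation: instead of training an embedding model, it represents each song by raw co-occurrence counts with nearby songs and ranks neighbors by cosine similarity. The playlist data and the function names (`build_song_vectors`, `cosine`, `recommend`) are hypothetical.

```python
from collections import Counter, defaultdict
from math import sqrt

# Hypothetical toy data: each playlist is a "sentence", each song ID a "token".
playlists = [
    ["rock_a", "rock_b", "rock_c"],
    ["rock_b", "rock_c", "rock_d"],
    ["jazz_a", "jazz_b", "jazz_c"],
    ["jazz_b", "jazz_c", "jazz_d"],
]

def build_song_vectors(playlists, window=2):
    """Represent each song by counts of the songs within `window` positions of it."""
    vectors = defaultdict(Counter)
    for playlist in playlists:
        for i, song in enumerate(playlist):
            start = max(0, i - window)
            end = min(len(playlist), i + window + 1)
            for j in range(start, end):
                if j != i:
                    vectors[song][playlist[j]] += 1
    return dict(vectors)

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[key] for key, count in a.items() if key in b)
    norm_a = sqrt(sum(v * v for v in a.values()))
    norm_b = sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def recommend(song, vectors, top_n=2):
    """Return the `top_n` songs whose context vectors are most similar to `song`."""
    target = vectors[song]
    scored = [
        (other, cosine(target, vec))
        for other, vec in vectors.items()
        if other != song
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return [other for other, _ in scored[:top_n]]

vectors = build_song_vectors(playlists)
print(recommend("rock_a", vectors))
```

In this toy data, `rock_a` and `rock_d` never share a playlist, yet `rock_d` ranks first for `rock_a` because both appear alongside the same songs. That is the intuition carried over from word embeddings: items that occur in similar contexts end up with similar vectors. A learned embedding model (word2vec, for example) would normally replace the counting step to get dense vectors that generalize better.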

Stay Tuned!

More chapter overviews to come in future posts. I hope you are able to get your hands on it and that you let us know what your experience was like going through it! (Oh, and once you’re done, we’d really appreciate it if you could review it on the platform you purchased it from or on Goodreads.)

Happy reading!

Jay